Data science aims to extract information or knowledge from data. What is new about this discipline lies mainly in the volume and heterogeneity of the data being collected. From an estimated 10²¹ bytes collected in 2010, the figure rose to 2.8 × 10²¹ bytes in 2012, and was projected to reach 40 × 10²¹ bytes by 2020; for comparison, the number of stars in the Universe is estimated at 10²⁴. Classical techniques for storing and analyzing data are evolving to cope with these volumes; they rely mainly on mathematics, statistics, computer science and data visualization.

The growth in collected data spares neither aeronautics nor, in particular, air transport. These data may be real-time data transmitted to the ground by aircraft, but also data specific to the operation of an airport, for example, or economic data. These developments call for new research programs, and also for action in the training of future civil aviation engineers and technicians.

With this aim, ENAC created the DEVI team. In the air transport context, the team contributes to advancing knowledge in data science, to building economic models that analyze the behavior of agents, to estimating and testing these models with the tools of econometrics and statistics, and to interpreting and visualizing data in order to produce knowledge and support decision-making.

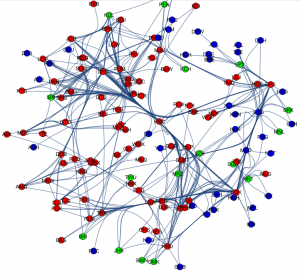

The team draws on skills in statistics, economics and interactive data visualization. This calls for expertise across a wide range of scientific fields, from research on methods (machine learning, data mining, data visualization, databases) to mastery of the scientific domain the data come from.

In this context, the team will be organized around three research axes: statistics, economics and interactive data visualization. These research activities will rely on database management specialists, in particular for the "ENAC Air Transport Data" database. In addition, we aim to provide a common storage space for the various databases already used in ENAC projects, both to make them easier to use and to allow them to be linked and cross-referenced, thereby enriching knowledge extraction.

Strong involvement in teaching is also one of the team's objectives: by developing students' skills in the fields the team covers, it aims to prepare ENAC students both for research and for careers in industry.